Computer vision systems are trained on large datasets composed of hundreds of thousands of images. Many of these images have been previously interpreted, classified, and labeled by human data annotators. Through data annotation, humans set the tone of what machines will learn to “see” and how they will interpret the world.

In formal terms, data annotation for computer vision involves tasks such as curation, labeling, and keywording, as well as semantic segmentation, which consists of marking and separating the different objects contained in a picture. But beyond formal task descriptions, annotation work is fundamentally about making sense of data, that is, about interpreting the information contained in each image. So not only data but also its interpretations are fundamental to computer vision.

In the same way that training data conditions systems, datasets are conditioned by the production contexts in which they are created, developed, and deployed. Working with computer vision datasets is not only a technical exercise; it also involves mastering forms of interpretation. Consequently, examining data provenance and the work practices involved in data production is essential for investigating the subjective worldviews and assumptions embedded in datasets and models.

Workers’ Subjectivity or Top-Down Imposition?

In a recent article published in the Proceedings of the ACM on Human-Computer Interaction, we presented a study of image annotation work practices as performed in industry settings. The research is based on several weeks of observations and interviews conducted in Argentina and Bulgaria, at two companies specializing in data annotation for computer vision. We investigated the role of workers’ subjectivity in the classification and labeling of images and described the structures and standards that shape the interpretation of data.

Like many other investigations, our study challenges the aura of calculative neutrality often ascribed to algorithmic systems by putting the spotlight on the humans behind the machine. However, while previous research largely focuses on analyzing and mitigating workers’ biases, our findings show that annotators are not entirely free in their interpretations and thus cannot embed biases in data on their own.

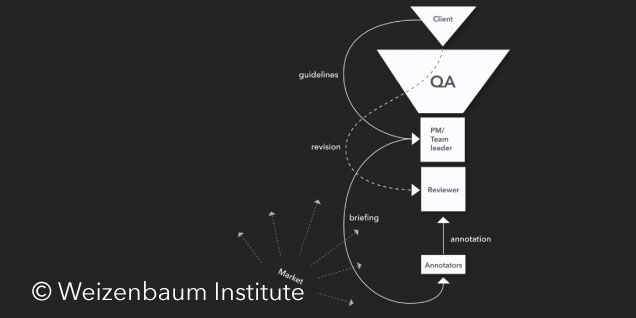

Several issues commonly framed as bias are, in fact, manifestations of power differentials that fundamentally shape data. Such power differentials can be as simple as being the client who requests and pays for annotations, or being the annotator whose job is on the line if they do not follow instructions. In these contexts, annotators follow the exact instructions provided by clients, annotate data according to predefined labels, and defer to managers’ judgment when ambiguities arise. Because clients are the ones paying for the annotations, they have the power to impose their preferred interpretations and to decide which labels best describe each image. Therefore, the interpretation of data that annotators perform is profoundly constrained by the interests, values, and priorities of those stakeholders with more (financial) power.

In short, power differentials shape computer vision datasets much more than annotators’ subjectivities do. Data is annotated within hierarchical structures and according to predefined expectations and interests. Briefings, guidelines, management supervision, and quality control all aim at meeting the demands of clients. Interpretations and labels are vertically imposed on annotators, and through them, on data.

This imposition is mostly naturalized as business as usual because it seems common sense that annotations be carried out according to the preferences of paying clients. However, the naturalization of such hierarchical processes conceals the fact that computer vision systems learn to “see” and judge reality according to the interests of those with the financial means to impose their worldviews on data.

Implications for Industry and Research

Annotating data is often presented as a technical matter. This investigation shows it is, in fact, an exercise of power with multiple implications for systems and society. So, what do our findings mean for industry and research?

First, these findings show that computer vision systems are crafted by human labor and reflective of commercial interests. Unfortunately, this fact is often rendered invisible by the enthusiasm of technologists. Before the smartest system is able to “see”, humans first need to make sense of the data that feeds it. Industry should acknowledge the human work that goes into creating computer vision systems, and research should look deeper into the working conditions and the power dynamics that profoundly shape datasets, labeling processes, and systems.

Second, we argue that techniques and methods to mitigate annotators’ bias will remain only partially effective as long as production contexts and dynamics of power and imposition remain unaccounted for. Starting from the assumption that power imbalances, rather than individual biases, are the problem leads to fundamentally different research questions and methods of inquiry. A shift of perspective in research is needed to move beyond approaches that tend to place responsibility for data-related issues exclusively on data annotators.

Finally, we advocate for the systematic documentation of computer vision datasets. Documentation should, of course, reflect datasets’ technical features, but it should also make explicit the actors, hierarchies, and rationale behind the labels assigned to data. If the context in which data has been annotated remains opaque, we will not be able to understand the assumptions and impositions encoded in datasets and reproduced by computer vision systems.
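To make this recommendation more tangible, here is a minimal sketch of what a machine-readable documentation record could look like. It is only an illustration: the class names, field names, and example values below are hypothetical and are not taken from the study or from any existing documentation standard.

```python
from dataclasses import dataclass, field, asdict
from typing import List
import json


@dataclass
class LabelDecision:
    """One label and the context in which it was defined (hypothetical schema)."""
    label: str
    defined_by: str   # role of the actor who introduced the label, e.g. "client"
    rationale: str    # why the label exists, as stated by that actor


@dataclass
class AnnotationContext:
    """Hypothetical record of the production context of an annotated dataset."""
    dataset_name: str
    num_images: int                  # a technical feature of the dataset
    requester: str                   # who commissioned and paid for the annotations
    annotation_provider: str         # who carried out the annotation work
    instruction_documents: List[str] = field(default_factory=list)
    quality_control: str = ""        # who reviews labels, and against which criteria
    label_decisions: List[LabelDecision] = field(default_factory=list)

    def labels_defined_by(self, role: str) -> List[str]:
        """Return the labels that were introduced by actors in the given role."""
        return [d.label for d in self.label_decisions if d.defined_by == role]


# Illustrative values only; they do not describe a real dataset.
record = AnnotationContext(
    dataset_name="example-street-scenes",
    num_images=1000,
    requester="Client A",
    annotation_provider="Annotation Company B",
    instruction_documents=["briefing.pdf", "labeling-guidelines.pdf"],
    quality_control="Reviewed by team leads against the client guidelines",
    label_decisions=[
        LabelDecision("pedestrian", "client", "Required by the client's use case"),
        LabelDecision("unclear", "manager", "Added to handle ambiguous images"),
    ],
)

# Serialize the record so it can be stored alongside the dataset.
record_json = json.dumps(asdict(record), indent=2)
```

A record of this kind could be published together with the dataset, so that anyone using it can see who defined each label and why, and not only how many images it contains.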

Find further information on the study in the following papers:

Between Subjectivity and Imposition: Power Dynamics in Data Annotation for Computer Vision.

Milagros Miceli, Martin Schuessler, and Tianling Yang. Proc. ACM Hum.-Comput. Interact. 4, CSCW2, Article 115 (October 2020), 25 pages.

Documenting Computer Vision Datasets: An Invitation to Reflexive Data Practices.

Milagros Miceli, Tianling Yang, Laurens Naudts, Martin Schuessler, Diana Serbanescu, and Alex Hanna. FAccT ’21: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 2021.